ChatGPT-parent OpenAI has acknowledged that its latest model, known as “o1,” could potentially be misused for creating biological weapons.

What Happened: Last week, OpenAI unveiled its new model, which has improved reasoning and problem-solving capabilities.

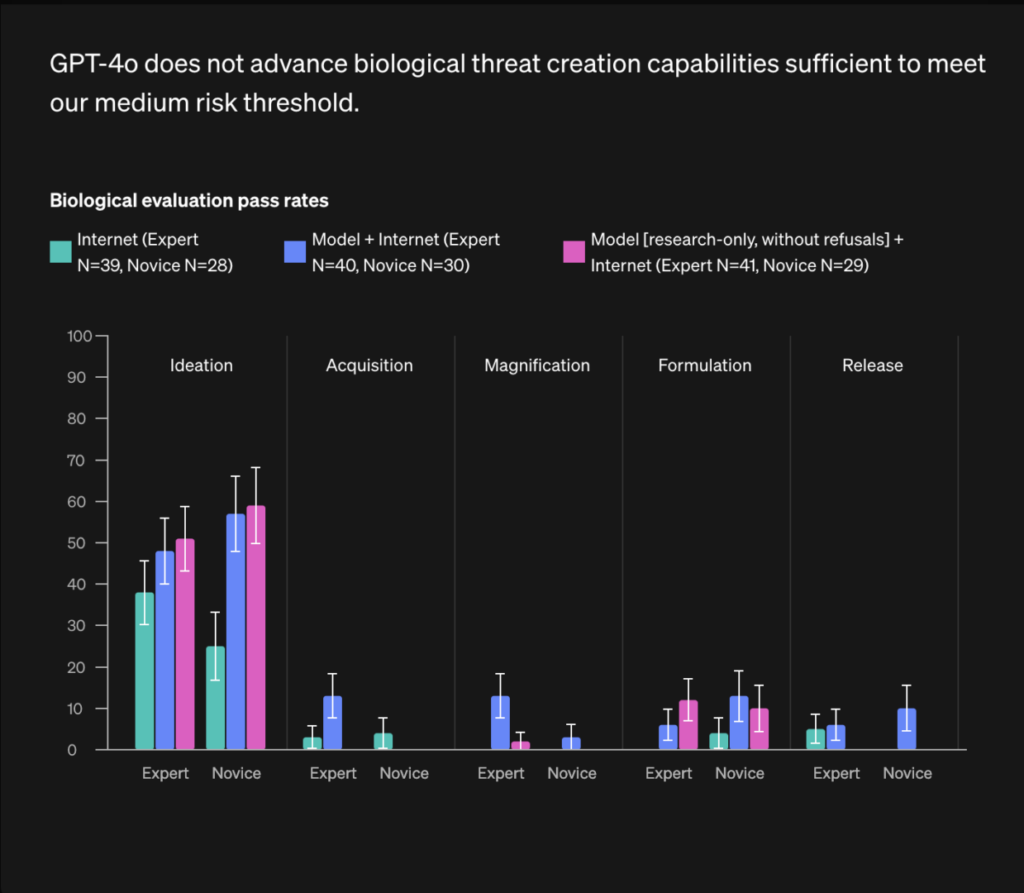

The company’s system card, a document that explains how the AI operates, rated the new models as posing a “medium risk” for issues related to chemical, biological, radiological, and nuclear (CBRN) weapons.

This is the highest risk level that OpenAI has ever assigned to its models. The company stated that this means the technology has “meaningfully improved” the ability of experts to create bioweapons, the Financial Times reported.

Image Credit: OpenAI GPT-4o System Card page

OpenAI CTO Mira Murati told the Financial Times that the company is being particularly “cautious” in how it introduces o1 to the public because of its advanced capabilities.

The model has been tested by red teamers and experts in various scientific domains who have tried to push the model to its limits. Murati stated that the current models performed far better on overall safety metrics than their predecessors.

Why It Matters: Experts cited in the report warn that AI software with more advanced capabilities, such as step-by-step reasoning, poses an increased risk of misuse by malicious actors.

Yoshua Bengio, a leading AI scientist and professor of computer science at the University of Montreal, stressed the urgency of legislation such as California’s contested bill SB 1047. The bill would require developers of high-cost models to minimize the risk of those models being used to develop bioweapons.

In January 2024, an OpenAI study found that its GPT-4 model offered only limited utility for bioweapon development. The study was initiated in response to concerns over the potential misuse of AI technologies for harmful purposes.

Previously, at a Capitol Hill hearing, Mark Zuckerberg rebutted claims by Tristan Harris, co-founder of the Center for Humane Technology, that Meta AI was unsafe and could lead to AI-generated weapons of mass destruction.

Meanwhile, it was reported earlier that OpenAI had teamed up with Los Alamos National Laboratory to explore the potential and risks of AI in scientific research.

Image via Shutterstock

Disclaimer: This content was partially produced with the help of Benzinga Neuro and was reviewed and published by Benzinga editors.

© 2025 Benzinga.com. Benzinga does not provide investment advice. All rights reserved.